I. Preparation

1. Import the dataset

import torch
import matplotlib.pyplot as plt
from torchvision import transforms, datasets
import os, PIL, random, pathlib

data_dir = r'D:\P5-data\test'
data_dir = pathlib.Path(data_dir)

# Each entry directly under data_dir is one class folder
data_paths = list(data_dir.glob('*'))
# Use the last path component instead of a fixed index into the split path string
classNames = [path.name for path in data_paths]
print(classNames)
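Class names come from the folder names, so taking the last path component gives the same result on any operating system and at any directory depth. The following is a minimal sketch of that idea. The helper `list_class_names` and the `cat`/`dog` folders are hypothetical and exist only for illustration.

```python
import pathlib
import tempfile


def list_class_names(data_dir):
    """Return the sorted names of the immediate subdirectories of data_dir."""
    root = pathlib.Path(data_dir)
    return sorted(p.name for p in root.iterdir() if p.is_dir())


# Hypothetical layout used only for illustration: one folder per class.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("dog", "cat"):
        (pathlib.Path(tmp) / name).mkdir()
    demo_names = list_class_names(tmp)

print(demo_names)
```

Sorting matters because `datasets.ImageFolder` assigns label indices to class folders in sorted order, so a sorted list lines up with the integer labels the data loader returns.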


2. Split the dataset

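On Windows, PyTorch's multi-process data loading has some limitations and known issues, so we set num_workers=0 when creating the data loaders, which disables multi-process data loading. This is a simple workaround, but it may slow down data loading.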
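As a rough illustration of the num_workers setting, the sketch below builds a loader over random stand-in tensors instead of the real image folders. The helper `make_loader` and the `demo_dataset` are assumptions made for this example and are not part of the original project.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(dataset, batch_size=32, num_workers=0):
    """Build a DataLoader; num_workers=0 loads batches in the main process."""
    return DataLoader(dataset, batch_size=batch_size, shuffle=True,
                      num_workers=num_workers)


# Hypothetical stand-in data: 10 random RGB "images" of 8x8 pixels, 2 classes.
demo_dataset = TensorDataset(torch.randn(10, 3, 8, 8),
                             torch.randint(0, 2, (10,)))

if __name__ == "__main__":
    # On Windows, worker processes are started with "spawn" and re-import this
    # module, so code that iterates a loader with num_workers > 0 should live
    # under this guard. With num_workers=0 the guard is not strictly required.
    loader = make_loader(demo_dataset, batch_size=4, num_workers=0)
    for images, labels in loader:
        print(images.shape, labels.shape)
        break
```

If loading becomes the bottleneck, raising num_workers inside such a guard is the usual next step to try. Whether it helps depends on the machine and the dataset.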

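Further reading: "torchvision.transforms.Compose() explained [PyTorch beginner's handbook]" (torchvision.transforms.Compose()详解【Pytorch入门手册】), K同学啊's blog on CSDN.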

train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),   # resize every image to 224 x 224
    transforms.ToTensor(),           # PIL image -> float tensor in [0, 1]
    transforms.Normalize(            # standardize each RGB channel
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])
])

test_transform = transforms.Compose([
    transforms.Resize([224, 224]),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])
])

train_dataset = datasets.ImageFolder("D:/P5-data/train/", transform=train_transforms)

# The test set uses test_transform, not the training transform
test_dataset = datasets.ImageFolder("D:/P5-data/test/", transform=test_transform)

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=0)

test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=True,
                                      num_workers=0)
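The transforms above first turn each image into a tensor with values in [0, 1] (ToTensor) and then standardize every channel as (x - mean) / std (Normalize). The plain-Python sketch below reproduces that arithmetic for a single pixel. The helper `normalize_pixel` and the white pixel are made up for illustration.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb_uint8):
    """Mimic ToTensor (divide by 255) followed by Normalize for one pixel."""
    scaled = [v / 255.0 for v in rgb_uint8]
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]


# Hypothetical pixel: pure white.
white = normalize_pixel([255, 255, 255])
print([round(v, 3) for v in white])
```

After normalization the values are no longer confined to [0, 1], which is expected. The mean and standard deviation used here are the commonly quoted ImageNet channel statistics.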

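Choosing the batch size

The batch size has to be tuned to the specific problem and the characteristics of the dataset. Common values are 32, 64 and 128. When choosing one, run experiments and monitor training and validation performance to find the value that works best for the task at hand.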
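One direct effect of the batch size is the number of optimizer steps per epoch. The sketch below computes it for the common values mentioned above. The dataset size of 1000 is an assumption for illustration only, and `batches_per_epoch` is a hypothetical helper.

```python
import math


def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields per epoch for the given sizes."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


# Hypothetical dataset size, chosen only for illustration.
num_samples = 1000
for bs in (32, 64, 128):
    print(bs, batches_per_epoch(num_samples, bs))
```

Larger batches mean fewer, smoother updates per epoch and more memory per step, so the learning rate usually has to be re-tuned when the batch size changes.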

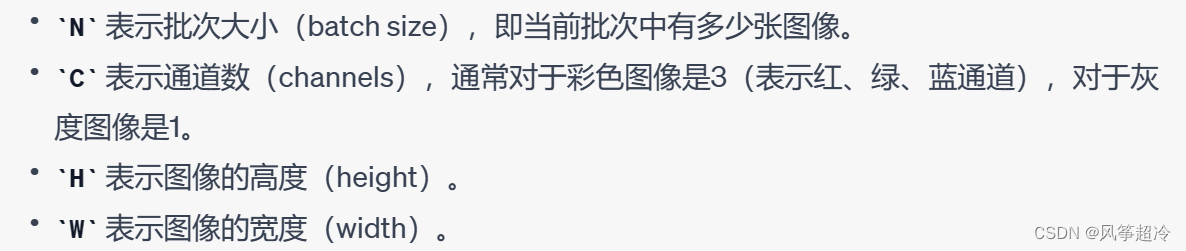

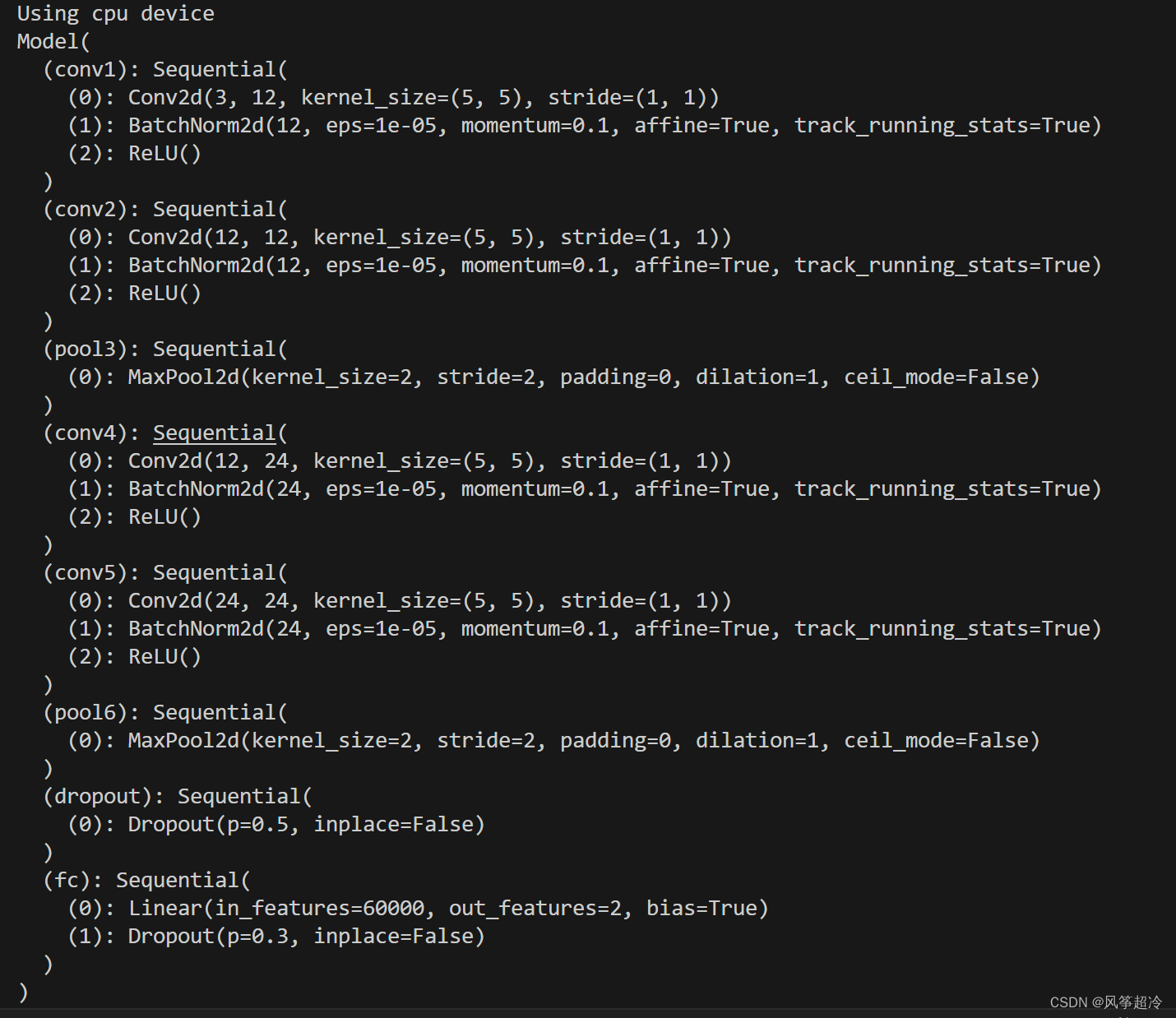

3. Inspect the data

# Print the shape of one batch, then stop
for images, labels in test_dl:
    print("Shape of images [N, C, H, W]: ", images.shape)
    print("Shape of labels: ", labels.shape, labels.dtype)
    break

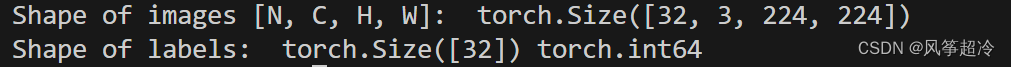

II. Building the neural network

import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # Shape comments assume a 3 x 224 x 224 input
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 12, kernel_size=5, padding=0),   # -> 12 x 220 x 220
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=5, padding=0),  # -> 12 x 216 x 216
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.pool3 = nn.Sequential(
            nn.MaxPool2d(2)                               # -> 12 x 108 x 108
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(12, 24, kernel_size=5, padding=0),  # -> 24 x 104 x 104
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.conv5 = nn.Sequential(
            nn.Conv2d(24, 24, kernel_size=5, padding=0),  # -> 24 x 100 x 100
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.pool6 = nn.Sequential(
            nn.MaxPool2d(2)                               # -> 24 x 50 x 50
        )
        self.dropout = nn.Sequential(
            nn.Dropout(0.5)
        )
        self.fc = nn.Sequential(
            nn.Linear(24*50*50, len(classNames)),
            nn.Dropout(0.3)
        )

    def forward(self, x):
        batch_size = x.size(0)
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.pool3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool6(x)
        x = self.dropout(x)
        # print(x.shape)
        x = x.view(batch_size, -1)  # flatten to (batch, 24*50*50), the input the fully connected layer expects
        x = self.fc(x)
        x = self.dropout(x)         # the p=0.5 dropout is applied again, here to the output logits
        return x

device = "cuda" if torch.cuda.is_available() else "cpu"
print("Using {} device".format(device))

model = Model().to(device)
print(model)

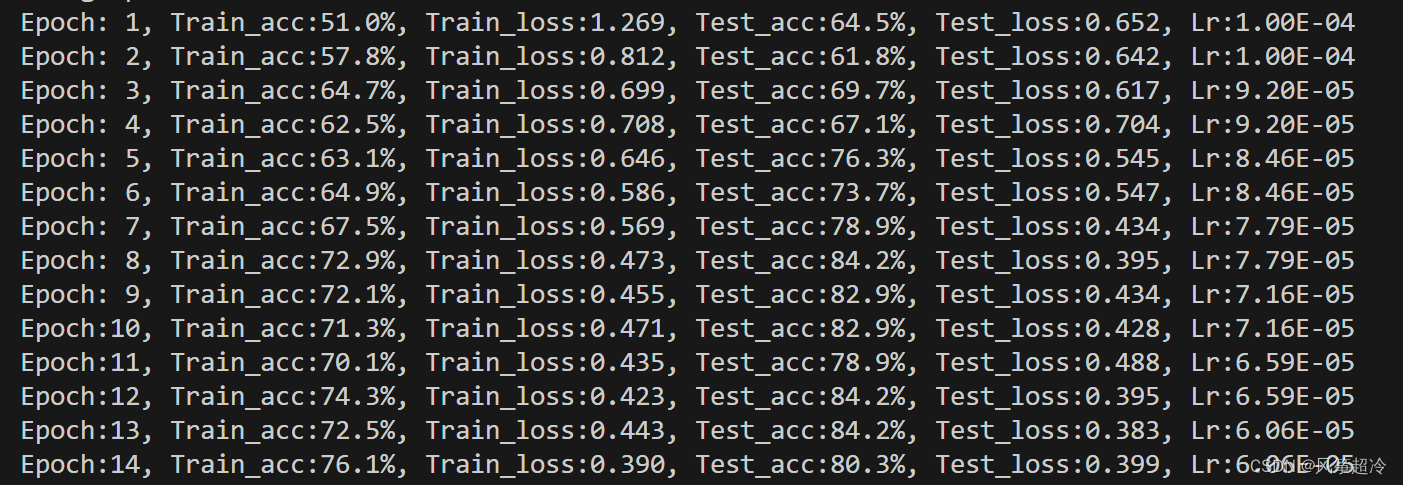
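The input size 24*50*50 of the Linear layer follows from how each layer shrinks a 224 x 224 image. The sketch below traces that arithmetic in plain Python using the standard output-size formulas for convolution and pooling. `conv_out` and `pool_out` are hypothetical helpers written for this example.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Output width/height of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output width/height of max pooling whose stride equals its kernel size."""
    return (size - kernel) // kernel + 1


size = 224
size = conv_out(size, 5)   # conv1
size = conv_out(size, 5)   # conv2
size = pool_out(size, 2)   # pool3
size = conv_out(size, 5)   # conv4
size = conv_out(size, 5)   # conv5
size = pool_out(size, 2)   # pool6

flat_features = 24 * size * size   # 24 channels after conv5
print(size, flat_features)
```

If the input resolution or any kernel size changes, the same trace gives the new value to put into the Linear layer.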

III. Training the model

1. Dynamic learning rate

def adjust_learning_rate(optimizer, epoch, start_lr):
    # Multiply the learning rate by 0.92 every 2 epochs
    lr = start_lr * (0.92 ** (epoch // 2))
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr

learn_rate = 1e-4  # initial learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)
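To see what this schedule produces, the sketch below evaluates the same formula for the first few epochs without any optimizer. The helper name `scheduled_lr` is hypothetical. The decay factor 0.92, the step of 2 epochs and the starting rate 1e-4 are taken from the code above.

```python
def scheduled_lr(epoch, start_lr, decay=0.92, step=2):
    """Staircase decay: multiply start_lr by `decay` once every `step` epochs."""
    return start_lr * (decay ** (epoch // step))


start_lr = 1e-4
schedule = [scheduled_lr(e, start_lr) for e in range(6)]
for e, lr in enumerate(schedule):
    print(f"epoch {e}: lr = {lr:.3e}")
```

The rate stays constant for two epochs and then drops by 8%. PyTorch's built-in `torch.optim.lr_scheduler.StepLR` with `step_size=2` and `gamma=0.92` should give the same staircase, if a library scheduler is preferred over the hand-written function.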

2. Training function

# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # number of training samples
    num_batches = len(dataloader)   # number of batches, ceil(size / batch_size)

    train_loss, train_acc = 0, 0    # running training loss and accuracy

    for X, y in dataloader:         # fetch images and their labels
        X, y = X.to(device), y.to(device)

        # Compute the prediction error
        pred = model(X)             # network output
        loss = loss_fn(pred, y)     # loss between the network output and the true labels y

        # Backpropagation
        optimizer.zero_grad()       # reset the gradients
        loss.backward()             # backpropagate
        optimizer.step()            # update the parameters

        # Accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

3. Test function

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # number of test samples
    num_batches = len(dataloader)   # number of batches, ceil(size / batch_size)
    test_loss, test_acc = 0, 0

    # Disable gradient tracking outside training to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss

4. Training run

loss_fn = nn.CrossEntropyLoss()  # create the loss function
epochs = 50
train_loss = []
train_acc = []
test_loss = []
test_acc = []

for epoch in range(epochs):
    # Update the learning rate (when using the custom schedule)
    adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Read back the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,
                          epoch_test_acc * 100, epoch_test_loss, lr))

print('Done')

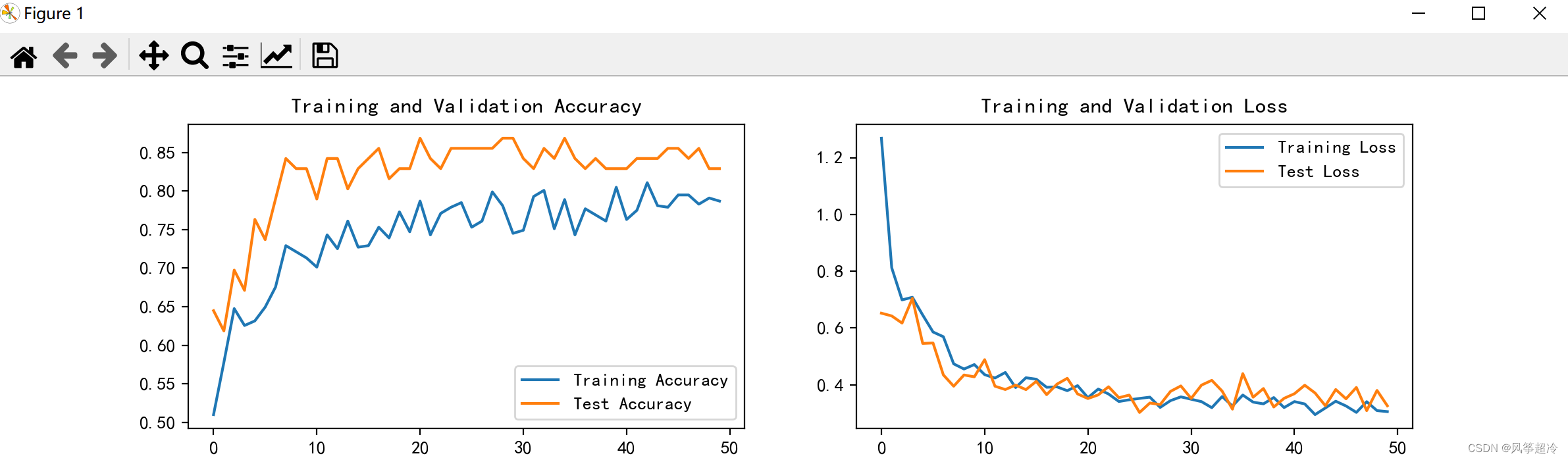
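The train and test functions divide the number of correct predictions by the number of samples, but divide the summed loss by the number of batches. Because `nn.CrossEntropyLoss` returns the mean over a batch by default, the reported loss is a mean of batch means, which matches the per-sample mean only when every batch has the same size. The sketch below shows the difference with made-up per-sample losses and a smaller last batch.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


# Hypothetical per-sample losses: 5 samples, batch size 2 -> batches of 2, 2, 1.
sample_losses = [1.0, 1.0, 1.0, 1.0, 4.0]
batch_size = 2
batches = [sample_losses[i:i + batch_size]
           for i in range(0, len(sample_losses), batch_size)]

# What the train()/test() functions report: the mean of per-batch mean losses.
mean_of_batch_means = mean([mean(b) for b in batches])
# The loss averaged over individual samples.
per_sample_mean = mean(sample_losses)

print(mean_of_batch_means, per_sample_mean)
```

With many full batches and a single short one the gap is usually small, so the simpler bookkeeping used in this post is a reasonable approximation for monitoring training.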

IV. Visualizing the results

import matplotlib.pyplot as plt
# Hide warnings
import warnings
warnings.filterwarnings("ignore")             # ignore warning messages
plt.rcParams['font.sans-serif'] = ['SimHei']  # font that renders Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly
plt.rcParams['figure.dpi'] = 100              # figure resolution

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))
plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
plt.show()

V. Complete code

import torch
import matplotlib.pyplot as plt
from torchvision import transforms, datasets
import os, PIL, random, pathlib

data_dir = r'D:\P5-data\test'
data_dir = pathlib.Path(data_dir)

# Each entry directly under data_dir is one class folder
data_paths = list(data_dir.glob('*'))
# Use the last path component instead of a fixed index into the split path string
classNames = [path.name for path in data_paths]
print(classNames)

train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),   # resize every image to 224 x 224
    transforms.ToTensor(),           # PIL image -> float tensor in [0, 1]
    transforms.Normalize(            # standardize each RGB channel
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])
])

test_transform = transforms.Compose([
    transforms.Resize([224, 224]),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])
])

train_dataset = datasets.ImageFolder("D:/P5-data/train/", transform=train_transforms)
# The test set uses test_transform, not the training transform
test_dataset = datasets.ImageFolder("D:/P5-data/test/", transform=test_transform)

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=0)

test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=True,
                                      num_workers=0)

import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 12, kernel_size=5, padding=0),
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=5, padding=0),
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.pool3 = nn.Sequential(
            nn.MaxPool2d(2)
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(12, 24, kernel_size=5, padding=0),
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.conv5 = nn.Sequential(
            nn.Conv2d(24, 24, kernel_size=5, padding=0),
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.pool6 = nn.Sequential(
            nn.MaxPool2d(2)
        )
        self.dropout = nn.Sequential(
            nn.Dropout(0.5)
        )
        self.fc = nn.Sequential(
            nn.Linear(24*50*50, len(classNames)),
            nn.Dropout(0.3)
        )

    def forward(self, x):
        batch_size = x.size(0)
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.pool3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool6(x)
        x = self.dropout(x)
        # print(x.shape)
        x = x.view(batch_size, -1)  # flatten to (batch, 24*50*50), the input the fully connected layer expects
        x = self.fc(x)
        x = self.dropout(x)
        return x

device = "cuda" if torch.cuda.is_available() else "cpu"
print("Using {} device".format(device))

model = Model().to(device)

def adjust_learning_rate(optimizer, epoch, start_lr):
    # Multiply the learning rate by 0.92 every 2 epochs
    lr = start_lr * (0.92 ** (epoch // 2))
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr

learn_rate = 1e-4  # initial learning rate
optimizer = torch.optim.Adam(model.parameters(), lr=learn_rate)

# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # number of training samples
    num_batches = len(dataloader)   # number of batches, ceil(size / batch_size)

    train_loss, train_acc = 0, 0    # running training loss and accuracy

    for X, y in dataloader:         # fetch images and their labels
        X, y = X.to(device), y.to(device)

        # Compute the prediction error
        pred = model(X)             # network output
        loss = loss_fn(pred, y)     # loss between the network output and the true labels y

        # Backpropagation
        optimizer.zero_grad()       # reset the gradients
        loss.backward()             # backpropagate
        optimizer.step()            # update the parameters

        # Accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # number of test samples
    num_batches = len(dataloader)   # number of batches, ceil(size / batch_size)
    test_loss, test_acc = 0, 0

    # Disable gradient tracking outside training to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss

loss_fn = nn.CrossEntropyLoss()  # create the loss function
epochs = 50
train_loss = []
train_acc = []
test_loss = []
test_acc = []

for epoch in range(epochs):
    # Update the learning rate (when using the custom schedule)
    adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Read back the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,
                          epoch_test_acc * 100, epoch_test_loss, lr))

print('Done')

import matplotlib.pyplot as plt
# Hide warnings
import warnings
warnings.filterwarnings("ignore")             # ignore warning messages
plt.rcParams['font.sans-serif'] = ['SimHei']  # font that renders Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly
plt.rcParams['figure.dpi'] = 100              # figure resolution

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))
plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
plt.show()